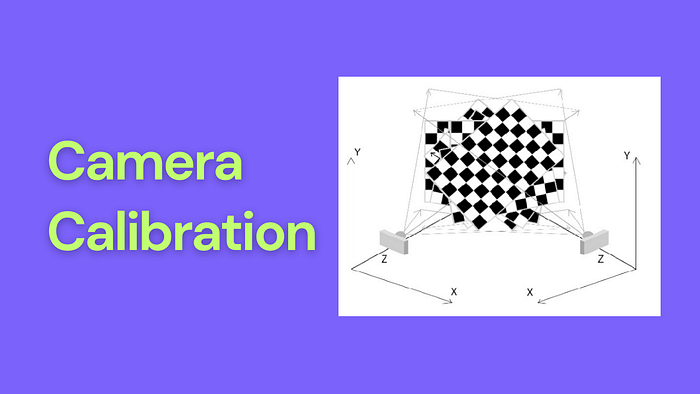

Camera Calibration Explained: Enhancing Accuracy in Computer Vision Applications

Have you ever wondered why some of your photos look distorted, with curved lines that should be straight?

Or why objects appear closer or farther than they actually are? The answer lies in camera calibration, a fascinating process that helps us bridge the gap between how cameras see the world and how it really is.

What is Camera Calibration?

Think of camera calibration like getting your eyes checked at an optometrist. Just as an eye doctor measures how your eyes process visual information, camera calibration helps us understand how a camera transforms the 3D world into 2D images. This understanding is crucial for applications ranging from smartphone photography to self-driving cars.

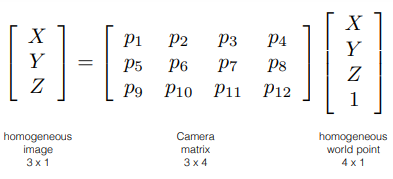

At its core, camera calibration relies on a surprisingly elegant mathematical equation: x = PX

In this equation:

- x represents the 2D point in your image

- P is the camera matrix (containing all camera parameters)

- X represents the 3D point in the real world

Think of the camera matrix P as your camera's personal "recipe" for converting 3D scenes to 2D images. This matrix contains two crucial sets of ingredients:

1. Intrinsic Parameters (The Camera’s “Personality”)

- Focal Length: How “zoomed in” your view is

- Principal Point: Where the center of the image truly lies

- Skew: Whether the pixel axes are truly perpendicular (close to zero for most modern sensors)

- Aspect Ratio: The relationship between pixel width and height

2. Extrinsic Parameters (The Camera’s “Position”)

- Rotation: Which way the camera is pointing

- Translation: Where the camera is located in space
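To make the "recipe" concrete, here is a minimal sketch of how the two sets of parameters can combine into P and project a point. It assumes the common decomposition P = K [R | t] with points written in homogeneous coordinates. All numbers (an 800-pixel focal length, a 640×480 image, an unrotated camera) are invented for illustration and do not come from a real camera.

```python
import numpy as np

# Intrinsic matrix K (illustrative values, not from a real camera)
fx, fy = 800.0, 800.0      # focal length in pixels along x and y
cx, cy = 320.0, 240.0      # principal point
skew = 0.0                 # pixel axes assumed perpendicular
K = np.array([[fx, skew, cx],
              [0.0, fy, cy],
              [0.0, 0.0, 1.0]])

# Extrinsic parameters: no rotation, world origin 5 units in front of the camera
R = np.eye(3)
t = np.array([[0.0], [0.0], [5.0]])

# Camera matrix P = K [R | t], a 3x4 matrix
P = K @ np.hstack([R, t])

# 3D world point in homogeneous coordinates (X, Y, Z, 1)
X = np.array([1.0, 0.5, 0.0, 1.0])

# x = PX gives a homogeneous 2D point; divide by the last entry to get pixels
x_h = P @ X
u, v = x_h[:2] / x_h[2]
print(u, v)  # 480.0 320.0
```

Changing fx, cx, R, or t and re-running shows how each parameter moves the projected pixel.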

Why Do We Need Camera Calibration?

Imagine trying to measure the distance between two buildings using only a photograph. Without proper calibration, you might get completely wrong measurements! Here’s why camera calibration matters:

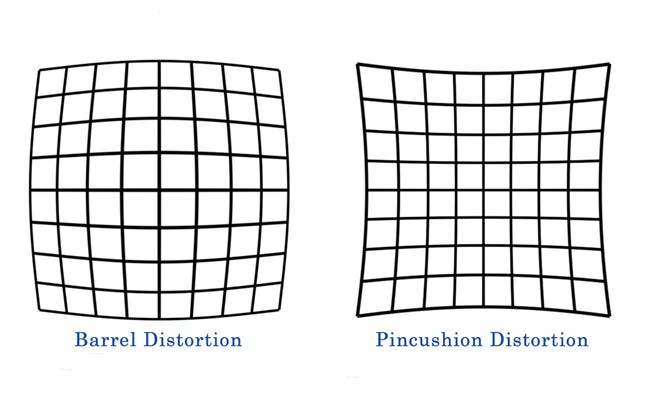

- Lens Distortion Correction: Ever noticed how straight edges bow outward near the borders of a wide-angle photo? That’s lens distortion at work, and calibration lets you measure and undo it.

- Accurate Measurements: Essential for industries like manufacturing and construction.

- Enhanced Computer Vision: Helps AI systems accurately interpret the world through cameras.

- Improved Augmented Reality: Makes virtual objects align properly with the real world.
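As a rough illustration of the first point, the sketch below applies a two-coefficient radial distortion model (a polynomial in the squared radius, the form OpenCV also uses for its radial terms) to three points on a straight line. The coefficients k1 and k2 are made up for this example; real values come out of calibration.

```python
def distort(x, y, k1, k2):
    """Apply radial distortion to a point in normalized image coordinates."""
    r2 = x * x + y * y                          # squared distance from the image center
    factor = 1.0 + k1 * r2 + k2 * r2 * r2       # radial scaling term
    return x * factor, y * factor


# Three points on the straight horizontal line y = 0.5
line = [(-1.0, 0.5), (0.0, 0.5), (1.0, 0.5)]
k1, k2 = -0.2, 0.0  # assumed values; a negative k1 produces barrel distortion

warped = [distort(x, y, k1, k2) for x, y in line]
for (x, y), (xd, yd) in zip(line, warped):
    print(f"({x:+.2f}, {y:.2f}) -> ({xd:+.3f}, {yd:.3f})")
```

After distortion the three y-values are no longer equal (0.375 at the ends, 0.475 in the middle), so the straight line has become a curve. Calibration estimates the coefficients so this effect can be inverted.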

How to Calibrate Your Camera: A Step-by-Step Guide

To calibrate a camera, follow these steps:

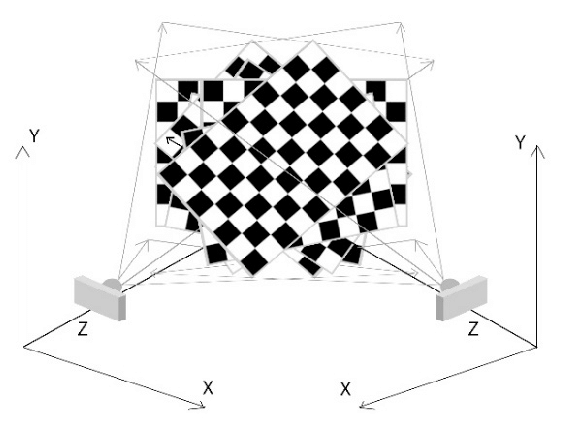

1. Prepare a Calibration Target: The most common target used is a chessboard pattern. You can print this pattern on paper or use a physical board with squares of known dimensions.

2. Capture Images: Take multiple images of the chessboard from different angles and distances. Ensure that the entire pattern is visible in each image.

3. Detect Corners: Use software tools to detect corners of the squares on the chessboard in each image.

4. Calculate Parameters: Using the detected corners and the known dimensions of the squares, compute the intrinsic and extrinsic parameters. Zhang’s method is the standard algorithm for this, and OpenCV’s cv2.calibrateCamera is based on it.

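5. Evaluate Calibration: Check the accuracy of your calibration by calculating the reprojection error, the pixel distance between each detected corner and the position where the estimated parameters project the corresponding 3D point. The closer this is to zero, the better.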
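The evaluation in step 5 can be sketched as a small helper. This is only an illustration: rms_reprojection_error is a hypothetical function name, and the point values are hand-written. In a real OpenCV workflow the reprojected points would typically come from cv2.projectPoints, and the first value returned by cv2.calibrateCamera already reports an overall RMS reprojection error.

```python
import numpy as np


def rms_reprojection_error(detected, reprojected):
    """Root-mean-square pixel distance between two sets of 2D points."""
    # reshape(-1, 2) also accepts OpenCV-style (N, 1, 2) arrays
    detected = np.asarray(detected, dtype=float).reshape(-1, 2)
    reprojected = np.asarray(reprojected, dtype=float).reshape(-1, 2)
    squared_dist = np.sum((detected - reprojected) ** 2, axis=1)
    return float(np.sqrt(squared_dist.mean()))


# Hand-written example values (not measured data)
detected = [[100.0, 200.0], [150.0, 200.0], [200.0, 200.0]]
reprojected = [[100.3, 200.4], [150.0, 200.0], [199.7, 199.6]]

error = rms_reprojection_error(detected, reprojected)
print(f"RMS reprojection error: {error:.3f} px")  # 0.408 px
```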

A Simple Python Implementation

Here’s a practical example using OpenCV to calibrate a camera:

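```python
import glob

import cv2
import numpy as np

# Termination criteria for sub-pixel corner refinement
criteria = (cv2.TERM_CRITERIA_EPS + cv2.TERM_CRITERIA_MAX_ITER, 30, 0.001)

# Number of inner corners on the chessboard: 7 per row, 6 per column
pattern_size = (7, 6)

# Object points (3D points in real-world space) on the Z = 0 plane,
# in units of one square: (0,0,0), (1,0,0), ..., (6,5,0)
objp = np.zeros((pattern_size[0] * pattern_size[1], 3), np.float32)
objp[:, :2] = np.mgrid[0:pattern_size[0], 0:pattern_size[1]].T.reshape(-1, 2)

# Arrays to store object points and image points from all images
objpoints = []  # 3D points in real-world space
imgpoints = []  # 2D points in the image plane
image_size = None  # (width, height) of the calibration images

# Load images
images = glob.glob('path/to/calibration/images/*.jpg')  # Update with your path

for fname in images:
    img = cv2.imread(fname)
    if img is None:  # Skip files that cannot be read
        continue
    gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
    image_size = gray.shape[::-1]

    # Find the chessboard corners
    ret, corners = cv2.findChessboardCorners(gray, pattern_size, None)
    if ret:
        objpoints.append(objp)
        # Refine the corner locations to sub-pixel accuracy
        corners2 = cv2.cornerSubPix(gray, corners, (11, 11), (-1, -1), criteria)
        imgpoints.append(corners2)

if not objpoints:
    raise RuntimeError("No chessboard corners were detected in any image")

# Calibrate the camera
ret, mtx, dist, rvecs, tvecs = cv2.calibrateCamera(
    objpoints, imgpoints, image_size, None, None)

print("Camera matrix:\n", mtx)
print("Distortion coefficients:\n", dist)
```

Source: OpenCV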
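The object points in this example are expressed in units of one chessboard square, so the translation vectors come out in "squares" rather than millimeters. If you want metric units, one option is to scale the object points by the measured square size. A minimal sketch, assuming a hypothetical 25 mm square (measure your own printed board):

```python
import numpy as np

square_size_mm = 25.0   # assumed value; measure your printed board
pattern_size = (7, 6)   # inner corners per row and per column

# Same grid as before, then scaled to physical units
objp = np.zeros((pattern_size[0] * pattern_size[1], 3), np.float32)
objp[:, :2] = np.mgrid[0:pattern_size[0], 0:pattern_size[1]].T.reshape(-1, 2)
objp *= square_size_mm

print(objp[1])    # second corner, one square along x
print(objp[-1])   # last corner of the grid
```

The scale only affects the units of the translation vectors; the camera matrix and distortion coefficients do not depend on it.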

Preparing Your Own Images

To prepare your own calibration images:

- Print a chessboard pattern or create one digitally.

- Ensure good lighting conditions while capturing images.

- Capture at least 10–15 images from various angles to achieve accurate results.

- Save these images in a dedicated folder for easy access during coding.
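If you would rather create the pattern digitally, a chessboard image can be generated in a few lines. This is a sketch under assumptions: make_chessboard is a hypothetical helper, and the sizes (8×7 squares of 100 pixels, which gives the 7×6 inner corners used in the code above) are illustrative. A white margin is added because corner detection tends to work better with a light border around the board.

```python
import numpy as np


def make_chessboard(squares_x=8, squares_y=7, square_px=100):
    """Return a grayscale chessboard image (uint8) with a white margin."""
    rows, cols = np.indices((squares_y, squares_x))
    # 0 (black) and 255 (white) alternate; the top-left square is black
    pattern = ((rows + cols) % 2).astype(np.uint8) * 255
    # Blow each cell up to square_px x square_px pixels
    board = np.kron(pattern, np.ones((square_px, square_px), dtype=np.uint8))
    # Surround the board with a white margin one square wide
    return np.pad(board, square_px, constant_values=255)


board = make_chessboard()
print(board.shape, board.dtype)  # (900, 1000) uint8
```

The array can then be written to disk, for example with cv2.imwrite and a filename of your choice, and printed without rescaling so the squares stay square.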

Practical Applications of Camera Calibration

Camera calibration has numerous practical applications across various fields. Here are some key areas where it plays a crucial role:

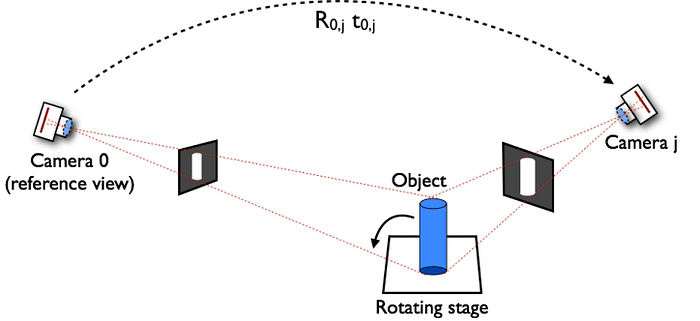

1. 3D Reconstruction

In applications such as virtual reality (VR) and augmented reality (AR), accurate 3D reconstruction is essential. Camera calibration allows systems to create precise 3D models from multiple 2D images by understanding how different images relate to one another in three-dimensional space. This is particularly important in gaming and simulation environments where realistic interactions are required.
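To show why calibrated cameras make this possible, here is a minimal sketch of linear triangulation: given the camera matrices of two views and the pixel where each one sees the same point, it recovers the 3D position. The cameras and the point are synthetic values chosen for illustration, and triangulate and project are hypothetical helper names. Real pipelines also have to deal with noise and lens distortion.

```python
import numpy as np


def project(P, X):
    """Project a 3D point X with a 3x4 camera matrix P to pixel coordinates."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]


def triangulate(P1, P2, x1, x2):
    """Linear (DLT) triangulation of one point seen in two views."""
    # Each view contributes two linear equations in the homogeneous 3D point
    A = np.array([
        x1[0] * P1[2] - P1[0],
        x1[1] * P1[2] - P1[1],
        x2[0] * P2[2] - P2[0],
        x2[1] * P2[2] - P2[1],
    ])
    # The solution is the right singular vector with the smallest singular value
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]
    return X[:3] / X[3]


# Two synthetic cameras with the same intrinsics (illustrative values)
K = np.array([[800.0, 0.0, 320.0],
              [0.0, 800.0, 240.0],
              [0.0, 0.0, 1.0]])
P1 = K @ np.hstack([np.eye(3), np.zeros((3, 1))])
# Second camera shifted 1 unit along +X, so t = -R C = (-1, 0, 0)
P2 = K @ np.hstack([np.eye(3), np.array([[-1.0], [0.0], [0.0]])])

X_true = np.array([0.5, 0.2, 4.0])
X_est = triangulate(P1, P2, project(P1, X_true), project(P2, X_true))
print(X_est)
```

With noise-free inputs like these, X_est matches X_true up to floating-point error. If P1 or P2 were wrong because of poor calibration, the recovered point would drift away from the true position.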

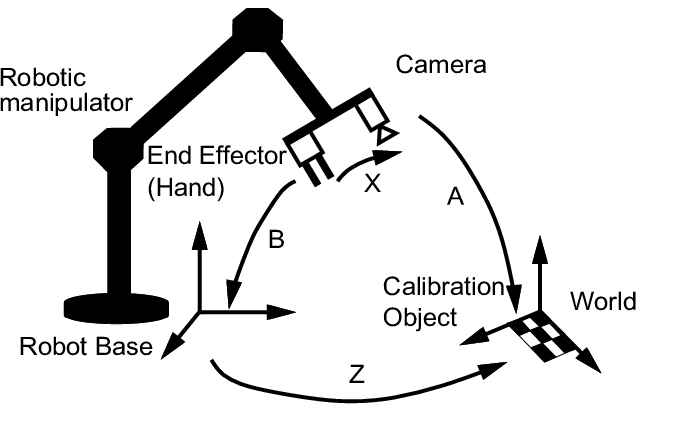

2. Robotics

Robots rely heavily on visual input to navigate and interact with their environment. Camera calibration enables robots to accurately perceive distances and sizes of objects in their surroundings. For instance, in autonomous vehicles, calibrated cameras help determine the position of obstacles and other vehicles, facilitating safe navigation.
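As a simple illustration of distance perception, the pinhole model relates an object's real width, its width in pixels, and the calibrated focal length. The sketch below assumes an undistorted image and an object facing the camera head-on. The function name and all numbers are hypothetical.

```python
def distance_from_size(focal_px, real_width_m, width_px):
    """Estimate distance to an object of known width using similar triangles."""
    if width_px <= 0:
        raise ValueError("width_px must be positive")
    return focal_px * real_width_m / width_px


# A 1.8 m wide car that appears 120 pixels wide, seen with an 800-pixel focal length
d = distance_from_size(focal_px=800.0, real_width_m=1.8, width_px=120.0)
print(f"Estimated distance: {d:.1f} m")  # 12.0 m
```

The estimate is only as good as the focal length from calibration: a 10% error in focal_px becomes a 10% error in distance.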

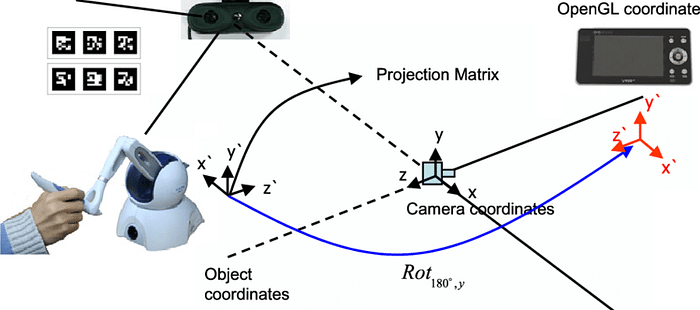

3. Augmented Reality

In augmented reality applications, overlaying digital information onto the real world requires precise alignment between virtual elements and real-world objects. Camera calibration ensures that the virtual content appears correctly positioned and scaled relative to the physical environment, enhancing user experience.

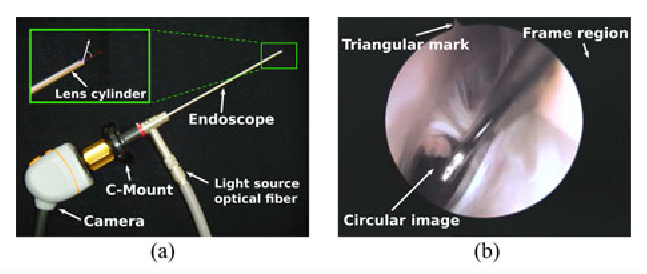

4. Medical Imaging

In medical imaging technologies such as endoscopy or radiology, camera calibration is crucial for accurate diagnosis and treatment planning. It ensures that images reflect true anatomical dimensions, aiding healthcare professionals in making informed decisions.

5. Sports Analysis

Camera calibration is used in sports technology to analyze player movements and game dynamics. By calibrating cameras positioned around sports fields or arenas, analysts can capture precise movements of players and equipment, leading to better training methods and strategies.

Conclusion

Camera calibration might seem complex at first, but it’s a fundamental process that helps us get the most accurate information from our cameras. Whether you’re a hobbyist photographer or developing computer vision applications, understanding camera calibration is crucial for achieving precise results.

Remember, perfect calibration takes practice. Start with the basics, experiment with the provided code, and gradually work your way up to more complex applications.

Reference

- https://docs.opencv.org/4.x/dc/dbb/tutorial_py_calibration.html

- https://www.youtube.com/playlist?list=PL7Fxe7FxChBa8DWnKQ0if4s8eBEOOVOdn